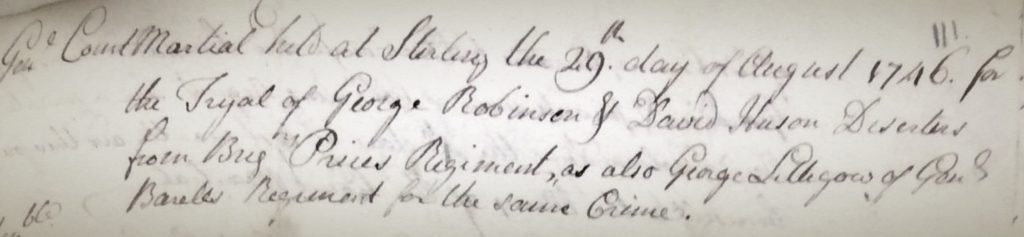

Amidst the complexities of dynastic opposition and civil war during the later Jacobite era, the loyalties and material commitment of individuals were often in flux and have not always been simple for historians to define cleanly. Significant Jacobite desertions have long been suspected (and more recently have been examined), but little scholarly enquiry has been made into cases of defection by soldiers within the government forces who were charged with quelling the Jacobite threat in Britain during the ’45.1 Resistance to martial service permeated both sides of the conflict, but deserting the ranks to avoid combat is one thing, while joining up with the enemy is another entirely. Archival evidence shows that soldiers in British service – including loyalist Highlanders on campaign in Scotland – deserted their units in smaller numbers than their Jacobite rivals, but incidents of soldiers breaking ranks were still a problem for British army officers and Hanoverian officials.2 Digging deeper into the sources further reveals that some of these deserters found both cause and motivation to fight amidst the ranks of Jacobite rebels.


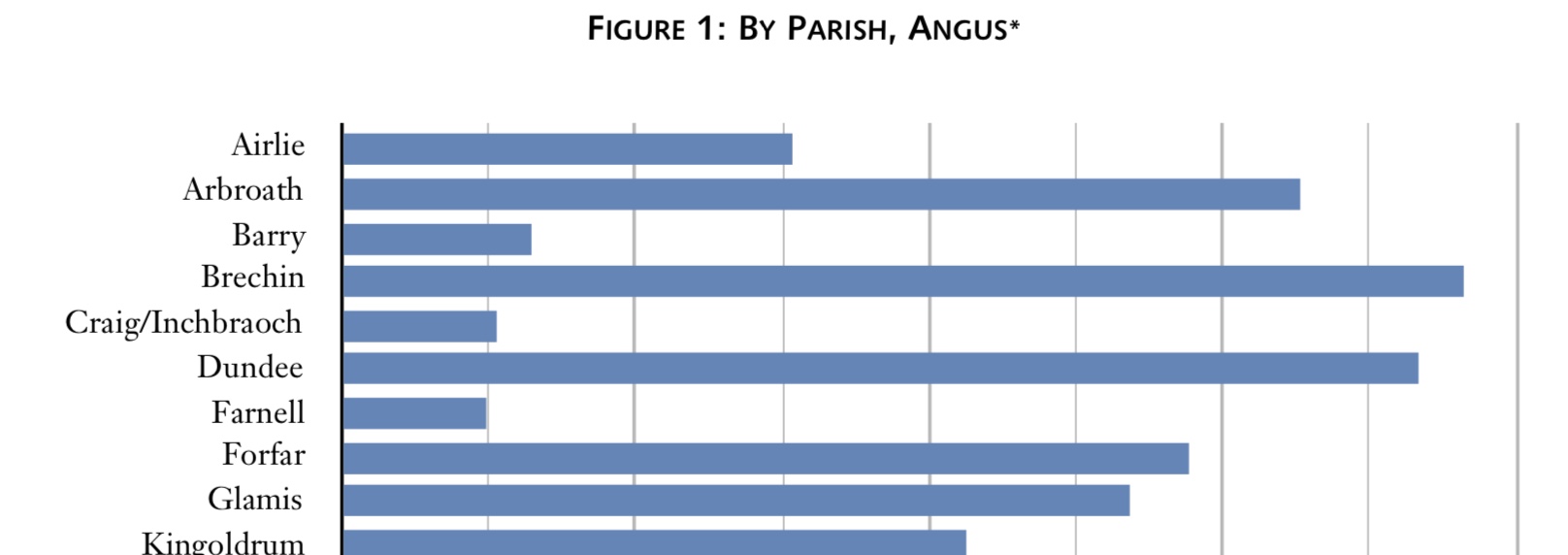

In our previous two posts, we introduced a case study model to demonstrate the utility of JDB1745 and we discussed a possible methodology that will give us more accurate results than those hitherto published. Now that we have examined the data’s lineage, established as much objectivity as possible, and implemented authority records in our model of Lord Ogilvy’s regiment, we are ready to take a look at the information and organize it in a way that facilitates the most useful analysis for our needs.1 We know that our assessment will not be comprehensive, since more sources continue to come to light and further biographical information is still being entered into the database. Yet we can take a ‘snapshot’ based upon the data that we do currently have. Here is what the numbers look like:

- Mackintosh’s Muster Roll: 628

- Rosebery’s List: 41

- Prisoners of the ’45: 276

- No Quarter Given: 761

To these, a few further sources can be consulted to add yet more names to the overall collection. A document at the National Library of Scotland, for example, contains another twenty-two from Ogilvy’s regiment, and 362 more with no particular regimental attribution.2 A broadsheet distributed by the Deputy Queen’s Remembrancer from 24 September 1747 furnishes a list of 243 gentlemen who had been attainted and judged guilty of high treason, some of whom had likely marched with the Forfarshire men.3 Various other documents held at NLS and in the Secretary of State Papers (Scotland, Domestic, and Entry Books) at the National Archives in Kew contribute thousands more, as do those from the British Library, Perth & Kinross Archives, Aberdeen City & Aberdeenshire Archives, and dozens of other publicly accessible collections.4 With a baseline collation of the major published sources regarding Lord Ogilvy’s regiment, buttressed by a few other useful manuscript sources, we have a solid corpus of data to examine.
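As a rough illustration of the arithmetic behind this snapshot, the sketch below tallies the four published sources listed above and shows each one's share of the total. The variable and function names are hypothetical and are not drawn from JDB1745 itself. Note the assumption that the same individual can appear in more than one source, so the raw sum should be read as a count of entries to be reconciled, not as a count of distinct people.

```python
# Entry counts for the four published sources listed in the post.
source_counts = {
    "Mackintosh's Muster Roll": 628,
    "Rosebery's List": 41,
    "Prisoners of the '45": 276,
    "No Quarter Given": 761,
}

# Raw number of entries across all four sources.
# Assumption: individuals may be listed in several sources, so this is
# an upper bound on distinct people, not a headcount of the regiment.
raw_total = sum(source_counts.values())


def share_by_source(counts):
    """Return each source's percentage share of all entries (1 decimal)."""
    total = sum(counts.values())
    return {name: round(100 * n / total, 1) for name, n in counts.items()}


shares = share_by_source(source_counts)

for name, n in source_counts.items():
    print(f"{name}: {n} entries ({shares[name]}%)")
print(f"Raw total of entries: {raw_total}")
```

A tally like this only describes the size of each source; establishing how many distinct individuals those entries represent would still depend on matching names across sources, for example through the authority records mentioned earlier.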

© 2025 Little Rebellions
